Following on last week’s

egregious discussion of the Hoot Scheme-to-WebAssembly compiler bootie,

today I would like to examine another axis of boot, which is a kind of

rebased branch of history: not the hack as it happened, but the logic inside the

hack, the structure of the built thing, the history as it might have been. Instead of describing the layers of shims and props that we used while discovering what were building, let’s look at how we would build Hoot again, if we had to.

I think many readers of this blog will have seen Growing a

Language, a talk /

performance art piece in which Guy L. Steele—I once mentioned to him

that Guy L. was one of the back-justifications for the name Guile; he

did not take it well—in which Steele takes the set of monosyllabic words as primitives

and builds up a tower of terms on top, bootstrapping a language as he

goes. I just watched it again and I think it holds up, probably well

enough to forgive the superfluous presence of the gender binary in the

intro; ideas were different in the 1900s.

It is in the sense of that talk that I would like to look at growing a

Hoot: how Hoot defines nouns and verbs in terms of smaller, more

primitive terms: terms in terms of terms.

(hoot features)

features

(hoot primitives)

primitives

(ice-9 match)

match

(ice-9 match):s->(hoot primitives):n

(hoot eq)

eq

(ice-9 match):s->(hoot eq):n

(hoot pairs)

pairs

(ice-9 match):s->(hoot pairs):n

(hoot vectors)

vectors

(ice-9 match):s->(hoot vectors):n

(hoot equal)

equal

(ice-9 match):s->(hoot equal):n

(hoot lists)

lists

(ice-9 match):s->(hoot lists):n

(hoot errors)

errors

(ice-9 match):s->(hoot errors):n

(hoot numbers)

numbers

(ice-9 match):s->(hoot numbers):n

(fibers scheduler)

scheduler

(hoot ffi)

ffi

(fibers scheduler):s->(hoot ffi):n

(guile)

(guile)

(fibers scheduler):s->(guile):n

(fibers channels)

channels

(fibers channels):s->(ice-9 match):n

(fibers waiter-queue)

waiter-queue

(fibers channels):s->(fibers waiter-queue):n

(fibers operations)

operations

(fibers channels):s->(fibers operations):n

(fibers channels):s->(guile):n

(srfi srfi-9)

srfi-9

(fibers channels):s->(srfi srfi-9):n

(fibers waiter-queue):s->(ice-9 match):n

(fibers waiter-queue):s->(fibers operations):n

(fibers waiter-queue):s->(guile):n

(fibers waiter-queue):s->(srfi srfi-9):n

(fibers promises)

promises

(fibers promises):s->(fibers operations):n

(hoot exceptions)

exceptions

(fibers promises):s->(hoot exceptions):n

(fibers promises):s->(hoot ffi):n

(fibers promises):s->(guile):n

(fibers conditions)

conditions

(fibers conditions):s->(ice-9 match):n

(fibers conditions):s->(fibers waiter-queue):n

(fibers conditions):s->(fibers operations):n

(fibers conditions):s->(guile):n

(fibers conditions):s->(srfi srfi-9):n

(fibers timers)

timers

(fibers timers):s->(fibers scheduler):n

(fibers timers):s->(fibers operations):n

(scheme time)

time

(fibers timers):s->(scheme time):n

(fibers timers):s->(guile):n

(fibers operations):s->(ice-9 match):n

(fibers operations):s->(fibers scheduler):n

(hoot boxes)

boxes

(fibers operations):s->(hoot boxes):n

(fibers operations):s->(guile):n

(fibers operations):s->(srfi srfi-9):n

(hoot eq):s->(hoot primitives):n

(hoot syntax)

syntax

(hoot eq):s->(hoot syntax):n

(hoot strings)

strings

(hoot strings):s->(hoot primitives):n

(hoot strings):s->(hoot eq):n

(hoot strings):s->(hoot pairs):n

(hoot bytevectors)

bytevectors

(hoot strings):s->(hoot bytevectors):n

(hoot strings):s->(hoot lists):n

(hoot bitwise)

bitwise

(hoot strings):s->(hoot bitwise):n

(hoot char)

char

(hoot strings):s->(hoot char):n

(hoot strings):s->(hoot errors):n

(hoot strings):s->(hoot numbers):n

(hoot match)

match

(hoot strings):s->(hoot match):n

(hoot pairs):s->(hoot primitives):n

(hoot bitvectors)

bitvectors

(hoot bitvectors):s->(hoot primitives):n

(hoot bitvectors):s->(hoot bitwise):n

(hoot bitvectors):s->(hoot errors):n

(hoot bitvectors):s->(hoot match):n

(hoot vectors):s->(hoot primitives):n

(hoot vectors):s->(hoot pairs):n

(hoot vectors):s->(hoot lists):n

(hoot vectors):s->(hoot errors):n

(hoot vectors):s->(hoot numbers):n

(hoot vectors):s->(hoot match):n

(hoot equal):s->(hoot primitives):n

(hoot equal):s->(hoot eq):n

(hoot equal):s->(hoot strings):n

(hoot equal):s->(hoot pairs):n

(hoot equal):s->(hoot bitvectors):n

(hoot equal):s->(hoot vectors):n

(hoot records)

records

(hoot equal):s->(hoot records):n

(hoot equal):s->(hoot bytevectors):n

(hoot not)

not

(hoot equal):s->(hoot not):n

(hoot values)

values

(hoot equal):s->(hoot values):n

(hoot hashtables)

hashtables

(hoot equal):s->(hoot hashtables):n

(hoot equal):s->(hoot numbers):n

(hoot equal):s->(hoot boxes):n

(hoot equal):s->(hoot match):n

(hoot exceptions):s->(hoot features):n

(hoot exceptions):s->(hoot primitives):n

(hoot exceptions):s->(hoot pairs):n

(hoot exceptions):s->(hoot records):n

(hoot exceptions):s->(hoot lists):n

(hoot exceptions):s->(hoot syntax):n

(hoot exceptions):s->(hoot errors):n

(hoot exceptions):s->(hoot match):n

(hoot cond-expand)

cond-expand

(hoot exceptions):s->(hoot cond-expand):n

(hoot parameters)

parameters

(hoot parameters):s->(hoot primitives):n

(hoot fluids)

fluids

(hoot parameters):s->(hoot fluids):n

(hoot parameters):s->(hoot errors):n

(hoot parameters):s->(hoot cond-expand):n

(hoot records):s->(hoot primitives):n

(hoot records):s->(hoot eq):n

(hoot records):s->(hoot pairs):n

(hoot records):s->(hoot vectors):n

(hoot symbols)

symbols

(hoot records):s->(hoot symbols):n

(hoot records):s->(hoot lists):n

(hoot records):s->(hoot values):n

(hoot records):s->(hoot bitwise):n

(hoot records):s->(hoot errors):n

(hoot ports)

ports

(hoot records):s->(hoot ports):n

(hoot records):s->(hoot numbers):n

(hoot records):s->(hoot match):n

(hoot keywords)

keywords

(hoot records):s->(hoot keywords):n

(hoot records):s->(hoot cond-expand):n

(hoot dynamic-wind)

dynamic-wind

(hoot dynamic-wind):s->(hoot primitives):n

(hoot dynamic-wind):s->(hoot syntax):n

(hoot bytevectors):s->(hoot primitives):n

(hoot bytevectors):s->(hoot bitwise):n

(hoot bytevectors):s->(hoot errors):n

(hoot bytevectors):s->(hoot match):n

(hoot error-handling)

error-handling

(hoot error-handling):s->(hoot primitives):n

(hoot error-handling):s->(hoot pairs):n

(hoot error-handling):s->(hoot exceptions):n

(hoot write)

write

(hoot error-handling):s->(hoot write):n

(hoot control)

control

(hoot error-handling):s->(hoot control):n

(hoot error-handling):s->(hoot fluids):n

(hoot error-handling):s->(hoot errors):n

(hoot error-handling):s->(hoot ports):n

(hoot error-handling):s->(hoot numbers):n

(hoot error-handling):s->(hoot match):n

(hoot error-handling):s->(hoot cond-expand):n

(hoot ffi):s->(hoot primitives):n

(hoot ffi):s->(hoot strings):n

(hoot ffi):s->(hoot pairs):n

(hoot procedures)

procedures

(hoot ffi):s->(hoot procedures):n

(hoot ffi):s->(hoot lists):n

(hoot ffi):s->(hoot not):n

(hoot ffi):s->(hoot errors):n

(hoot ffi):s->(hoot numbers):n

(hoot ffi):s->(hoot cond-expand):n

(hoot debug)

debug

(hoot debug):s->(hoot primitives):n

(hoot debug):s->(hoot match):n

(hoot symbols):s->(hoot primitives):n

(hoot symbols):s->(hoot errors):n

(hoot assoc)

assoc

(hoot assoc):s->(hoot primitives):n

(hoot assoc):s->(hoot eq):n

(hoot assoc):s->(hoot pairs):n

(hoot assoc):s->(hoot equal):n

(hoot assoc):s->(hoot lists):n

(hoot assoc):s->(hoot not):n

(hoot procedures):s->(hoot primitives):n

(hoot procedures):s->(hoot syntax):n

(hoot write):s->(hoot primitives):n

(hoot write):s->(hoot eq):n

(hoot write):s->(hoot strings):n

(hoot write):s->(hoot pairs):n

(hoot write):s->(hoot bitvectors):n

(hoot write):s->(hoot vectors):n

(hoot write):s->(hoot records):n

(hoot write):s->(hoot bytevectors):n

(hoot write):s->(hoot symbols):n

(hoot write):s->(hoot procedures):n

(hoot write):s->(hoot bitwise):n

(hoot write):s->(hoot char):n

(hoot write):s->(hoot errors):n

(hoot write):s->(hoot ports):n

(hoot write):s->(hoot numbers):n

(hoot write):s->(hoot keywords):n

(hoot lists):s->(hoot primitives):n

(hoot lists):s->(hoot pairs):n

(hoot lists):s->(hoot values):n

(hoot lists):s->(hoot numbers):n

(hoot lists):s->(hoot match):n

(hoot lists):s->(hoot cond-expand):n

(hoot not):s->(hoot syntax):n

(hoot syntax):s->(hoot primitives):n

(hoot values):s->(hoot primitives):n

(hoot values):s->(hoot syntax):n

(hoot control):s->(hoot primitives):n

(hoot control):s->(hoot parameters):n

(hoot control):s->(hoot values):n

(hoot control):s->(hoot cond-expand):n

(hoot bitwise):s->(hoot primitives):n

(hoot char):s->(hoot primitives):n

(hoot char):s->(hoot bitvectors):n

(hoot char):s->(hoot bitwise):n

(hoot char):s->(hoot errors):n

(hoot char):s->(hoot match):n

(hoot dynamic-states)

dynamic-states

(hoot dynamic-states):s->(hoot primitives):n

(hoot dynamic-states):s->(hoot vectors):n

(hoot dynamic-states):s->(hoot debug):n

(hoot dynamic-states):s->(hoot lists):n

(hoot dynamic-states):s->(hoot values):n

(hoot dynamic-states):s->(hoot errors):n

(hoot dynamic-states):s->(hoot numbers):n

(hoot dynamic-states):s->(hoot match):n

(hoot read)

read

(hoot read):s->(hoot primitives):n

(hoot read):s->(hoot eq):n

(hoot read):s->(hoot strings):n

(hoot read):s->(hoot pairs):n

(hoot read):s->(hoot bitvectors):n

(hoot read):s->(hoot vectors):n

(hoot read):s->(hoot exceptions):n

(hoot read):s->(hoot symbols):n

(hoot read):s->(hoot lists):n

(hoot read):s->(hoot not):n

(hoot read):s->(hoot values):n

(hoot read):s->(hoot char):n

(hoot read):s->(hoot errors):n

(hoot read):s->(hoot ports):n

(hoot read):s->(hoot numbers):n

(hoot read):s->(hoot match):n

(hoot read):s->(hoot keywords):n

(hoot hashtables):s->(hoot primitives):n

(hoot hashtables):s->(hoot eq):n

(hoot hashtables):s->(hoot pairs):n

(hoot hashtables):s->(hoot vectors):n

(hoot hashtables):s->(hoot procedures):n

(hoot hashtables):s->(hoot lists):n

(hoot hashtables):s->(hoot values):n

(hoot hashtables):s->(hoot bitwise):n

(hoot hashtables):s->(hoot errors):n

(hoot hashtables):s->(hoot numbers):n

(hoot fluids):s->(hoot primitives):n

(hoot fluids):s->(hoot cond-expand):n

(hoot errors):s->(hoot primitives):n

(hoot atomics)

atomics

(hoot atomics):s->(hoot primitives):n

(hoot ports):s->(hoot primitives):n

(hoot ports):s->(hoot eq):n

(hoot ports):s->(hoot strings):n

(hoot ports):s->(hoot pairs):n

(hoot ports):s->(hoot vectors):n

(hoot ports):s->(hoot parameters):n

(hoot ports):s->(hoot bytevectors):n

(hoot ports):s->(hoot procedures):n

(hoot ports):s->(hoot lists):n

(hoot ports):s->(hoot not):n

(hoot ports):s->(hoot values):n

(hoot ports):s->(hoot bitwise):n

(hoot ports):s->(hoot char):n

(hoot ports):s->(hoot errors):n

(hoot ports):s->(hoot numbers):n

(hoot ports):s->(hoot boxes):n

(hoot ports):s->(hoot match):n

(hoot ports):s->(hoot cond-expand):n

(hoot numbers):s->(hoot primitives):n

(hoot numbers):s->(hoot eq):n

(hoot numbers):s->(hoot not):n

(hoot numbers):s->(hoot values):n

(hoot numbers):s->(hoot bitwise):n

(hoot numbers):s->(hoot errors):n

(hoot numbers):s->(hoot match):n

(hoot boxes):s->(hoot primitives):n

(hoot match):s->(hoot primitives):n

(hoot match):s->(hoot errors):n

(hoot keywords):s->(hoot primitives):n

(hoot cond-expand):s->(hoot features):n

(hoot cond-expand):s->(hoot primitives):n

(scheme lazy)

lazy

(scheme lazy):s->(hoot primitives):n

(scheme lazy):s->(hoot records):n

(scheme lazy):s->(hoot match):n

(scheme base)

base

(scheme lazy):s->(scheme base):n

(scheme load)

load

(scheme load):s->(hoot primitives):n

(scheme load):s->(hoot errors):n

(scheme load):s->(scheme base):n

(scheme complex)

complex

(scheme complex):s->(hoot numbers):n

(scheme time):s->(hoot primitives):n

(scheme time):s->(scheme base):n

(scheme file)

file

(scheme file):s->(hoot primitives):n

(scheme file):s->(hoot errors):n

(scheme file):s->(hoot ports):n

(scheme file):s->(hoot match):n

(scheme file):s->(scheme base):n

(scheme write)

write

(scheme write):s->(hoot write):n

(scheme eval)

eval

(scheme eval):s->(hoot errors):n

(scheme eval):s->(scheme base):n

(scheme inexact)

inexact

(scheme inexact):s->(hoot primitives):n

(scheme inexact):s->(hoot numbers):n

(scheme char)

char

(scheme char):s->(hoot primitives):n

(scheme char):s->(hoot bitwise):n

(scheme char):s->(hoot char):n

(scheme char):s->(hoot numbers):n

(scheme char):s->(scheme base):n

(scheme process-context)

process-context

(scheme process-context):s->(hoot primitives):n

(scheme process-context):s->(hoot errors):n

(scheme process-context):s->(scheme base):n

(scheme cxr)

cxr

(scheme cxr):s->(hoot pairs):n

(scheme read)

read

(scheme read):s->(hoot read):n

(scheme base):s->(hoot features):n

(scheme base):s->(hoot primitives):n

(scheme base):s->(hoot eq):n

(scheme base):s->(hoot strings):n

(scheme base):s->(hoot pairs):n

(scheme base):s->(hoot vectors):n

(scheme base):s->(hoot equal):n

(scheme base):s->(hoot exceptions):n

(scheme base):s->(hoot parameters):n

(scheme base):s->(hoot dynamic-wind):n

(scheme base):s->(hoot bytevectors):n

(scheme base):s->(hoot error-handling):n

(scheme base):s->(hoot symbols):n

(scheme base):s->(hoot assoc):n

(scheme base):s->(hoot procedures):n

(scheme base):s->(hoot write):n

(scheme base):s->(hoot lists):n

(scheme base):s->(hoot not):n

(scheme base):s->(hoot syntax):n

(scheme base):s->(hoot values):n

(scheme base):s->(hoot control):n

(scheme base):s->(hoot char):n

(scheme base):s->(hoot read):n

(scheme base):s->(hoot errors):n

(scheme base):s->(hoot ports):n

(scheme base):s->(hoot numbers):n

(scheme base):s->(hoot match):n

(scheme base):s->(hoot cond-expand):n

(scheme base):s->(srfi srfi-9):n

(scheme repl)

repl

(scheme repl):s->(hoot errors):n

(scheme repl):s->(scheme base):n

(scheme r5rs)

r5rs

(scheme r5rs):s->(scheme lazy):n

(scheme r5rs):s->(scheme load):n

(scheme r5rs):s->(scheme complex):n

(scheme r5rs):s->(scheme file):n

(scheme r5rs):s->(scheme write):n

(scheme r5rs):s->(scheme eval):n

(scheme r5rs):s->(scheme inexact):n

(scheme r5rs):s->(scheme char):n

(scheme r5rs):s->(scheme process-context):n

(scheme r5rs):s->(scheme cxr):n

(scheme r5rs):s->(scheme read):n

(scheme r5rs):s->(scheme base):n

(scheme r5rs):s->(scheme repl):n

(scheme case-lambda)

case-lambda

(scheme case-lambda):s->(hoot primitives):n

(fibers)

(fibers)

(fibers):s->(fibers scheduler):n

(fibers):s->(guile):n

(guile):s->(hoot features):n

(guile):s->(hoot primitives):n

(guile):s->(ice-9 match):n

(guile):s->(hoot eq):n

(guile):s->(hoot strings):n

(guile):s->(hoot pairs):n

(guile):s->(hoot bitvectors):n

(guile):s->(hoot vectors):n

(guile):s->(hoot equal):n

(guile):s->(hoot exceptions):n

(guile):s->(hoot parameters):n

(guile):s->(hoot dynamic-wind):n

(guile):s->(hoot bytevectors):n

(guile):s->(hoot error-handling):n

(guile):s->(hoot symbols):n

(guile):s->(hoot assoc):n

(guile):s->(hoot procedures):n

(guile):s->(hoot write):n

(guile):s->(hoot lists):n

(guile):s->(hoot not):n

(guile):s->(hoot syntax):n

(guile):s->(hoot values):n

(guile):s->(hoot control):n

(guile):s->(hoot bitwise):n

(guile):s->(hoot char):n

(guile):s->(hoot dynamic-states):n

(guile):s->(hoot read):n

(guile):s->(hoot fluids):n

(guile):s->(hoot errors):n

(guile):s->(hoot ports):n

(guile):s->(hoot numbers):n

(guile):s->(hoot boxes):n

(guile):s->(hoot keywords):n

(guile):s->(hoot cond-expand):n

(guile):s->(scheme lazy):n

(guile):s->(scheme time):n

(guile):s->(scheme file):n

(guile):s->(scheme char):n

(guile):s->(scheme process-context):n

(guile):s->(scheme base):n

(guile):s->(srfi srfi-9):n

(srfi srfi-9):s->(hoot primitives):n

(srfi srfi-9):s->(hoot records):n

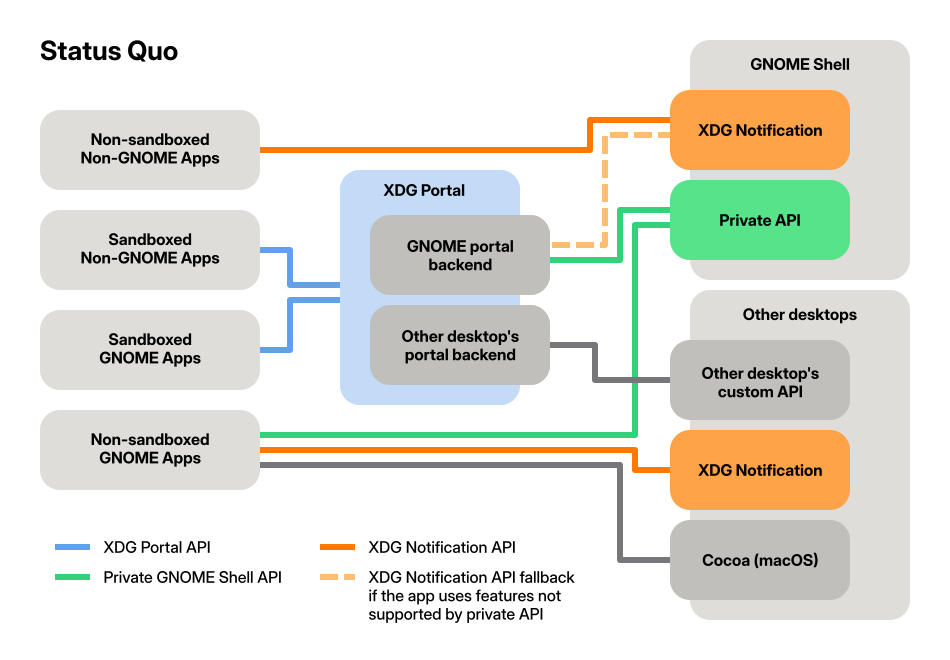

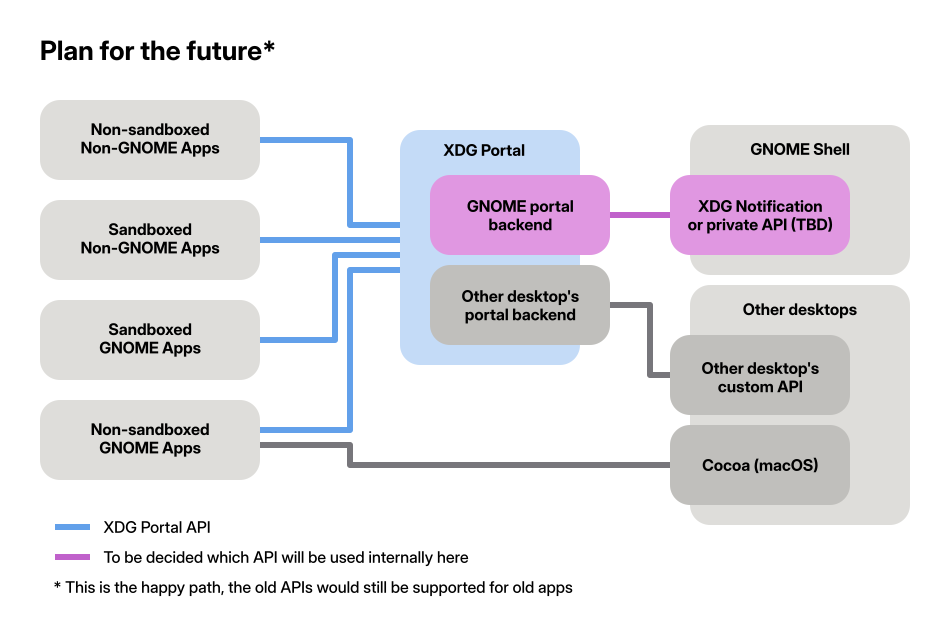

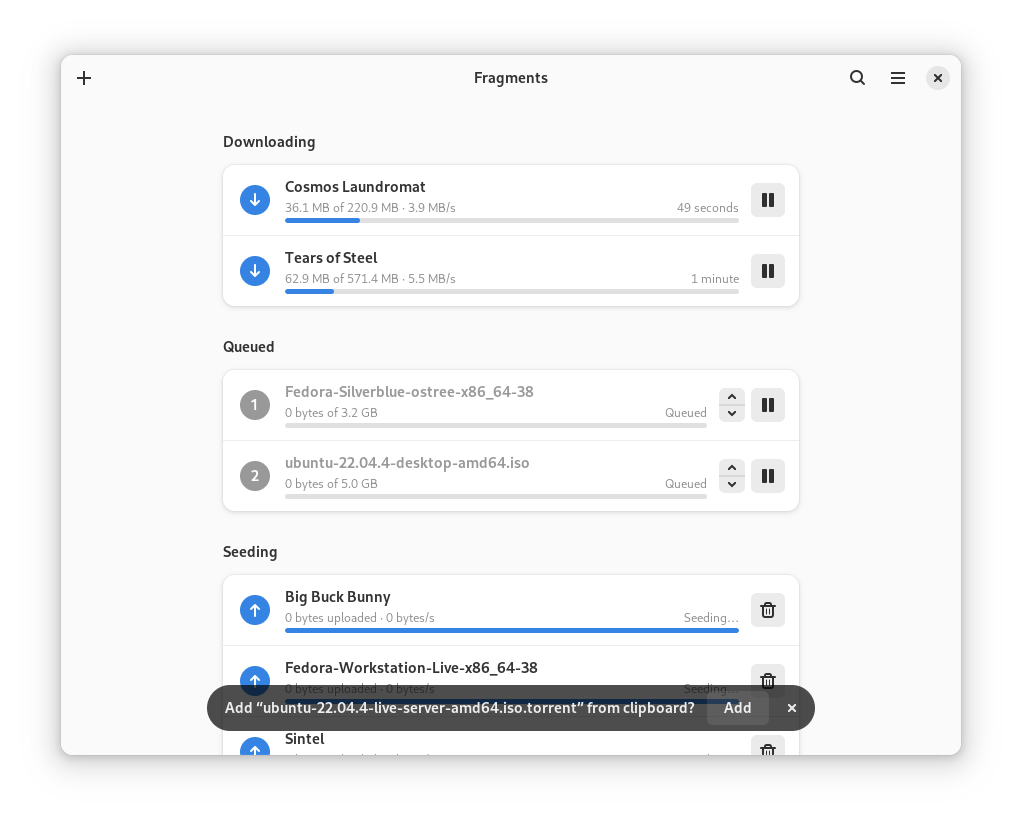

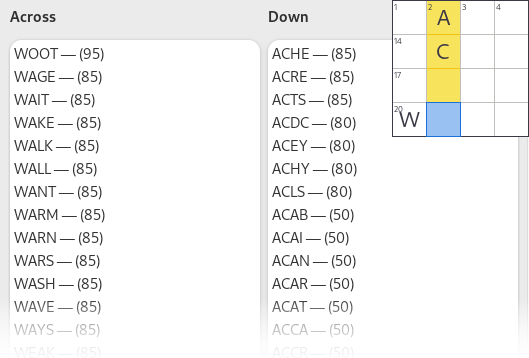

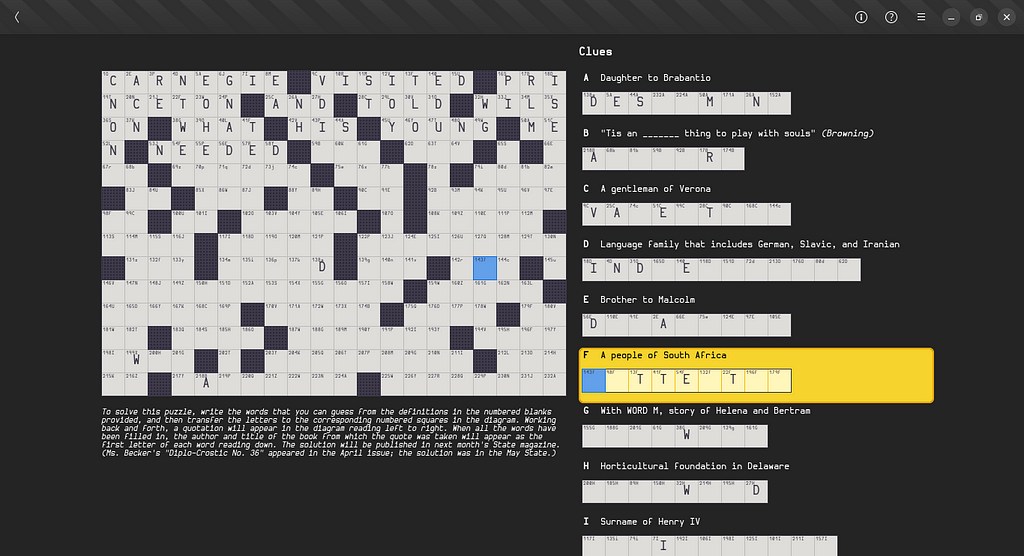

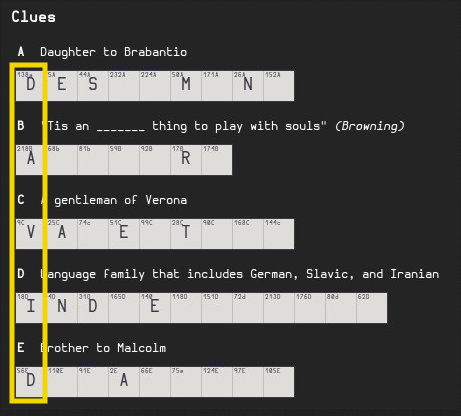

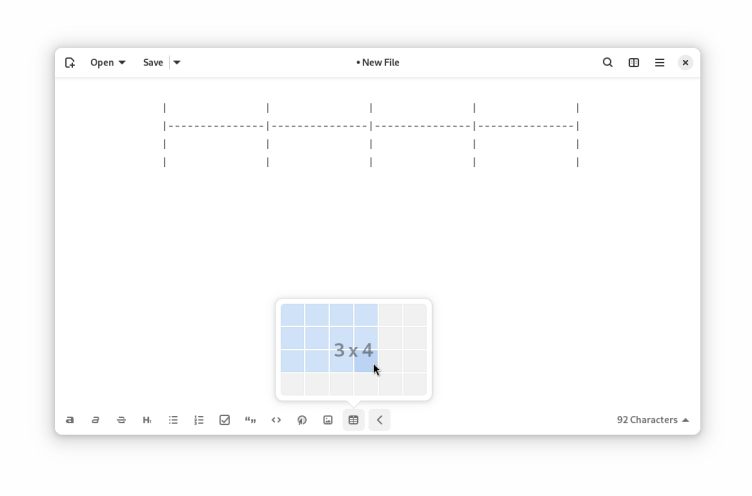

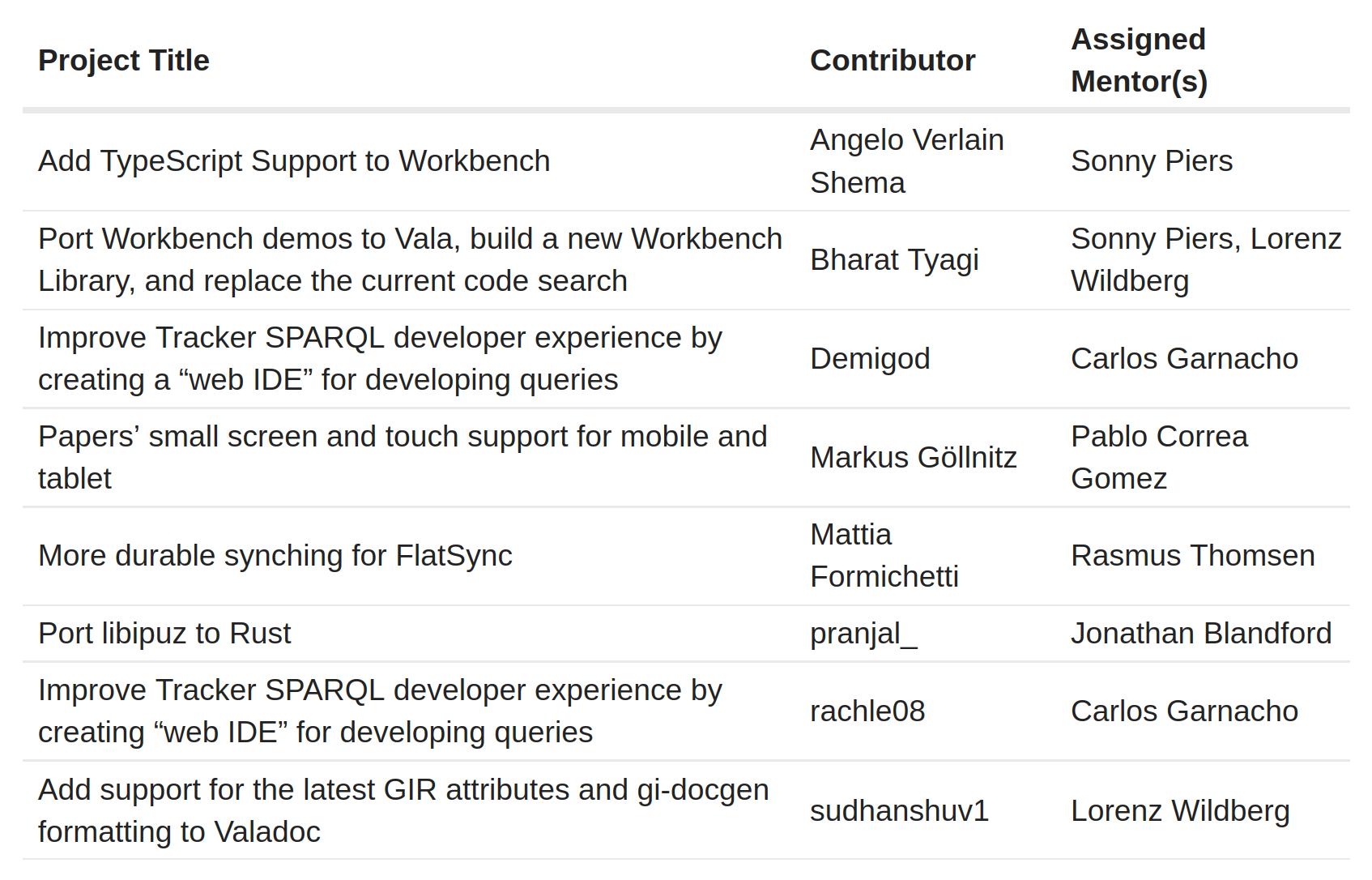

If you are reading this on the web, you should see above a graph of

dependencies among the 50 or so libraries that are shipped as part of

Hoot. (Somehow I doubt that a feed reader will plumb through the inline

SVG, but who knows.) It’s a bit of a mess, but still I think it’s a

useful illustration of a number of properties of how the Hoot language

is grown from small to large. Click on any box to visit the source code for that module.

the root of the boot

Firstly, let us note that the graph is not a forest: it is a single

tree. There is no module that does not depend (possibly indirectly) on

(hoot primitives). This is because there are no capabilities that

Hoot libraries can access without importing them, and the only way into

the Hootosphere from outside is via the definitions in the primitives

module.

So what are these definitions, you might ask? Well, these are the

“well-known” bindings, for example + for which the compiler might have

some special understanding, the sort of binding that gets translated to

a primitive operation at the compiler IR level. They are used in

careful ways by the modules that use (hoot primitives) to ensure that

their uses are all open-coded by the compiler. (“Open coding” is

inlining. But inlining to me implies that the whole implementation is

inlined, with no slow-path callouts, whereas open coding implies to me

that it’s the compiler that knows what the op does and may or may not

inline the actual asm.)

But, (hoot primitives) also exposes some other definitions, for

example define and let and lambda and all that. Scheme doesn’t

have keywords in the sense that Python has def and with and such:

there is no privileged way to associate a name with its meaning. It is

in this sense that it is impossible to avoid (hoot primitives): the

most simple (define x 42) depends on the lexical meaning of define,

which is provided by the primitives module.

Syntax definitions are an expander construct; they are not present at

run-time. Using a syntax definition causes the expander to invoke code,

and the expander runs on the host system, which is Guile and not

WebAssembly. So, syntax definitions belong to the host. This goes also

for some first-order definitions such as syntax->datum and so on,

which are only used in syntax expanders; these definitions are plumbed

through (hoot primitives), but can only ever be used by macro

definitions, which run on the meta-level.

(Is this too heavy? Allow me to lighten the mood: when I was 22 or so

and working in Namibia, I somehow got an advance copy of Notes from the

Metalevel. I was working on

algorithmic music synthesis, and my chief strategy was knocking hubris

together with itself, as one does. I sent the author a bunch of

uninvited corrections to his book. I think it was completely unwelcome!

Anyway, moral of the story, at 22 you get a free pass to do whatever you

want, and come to think of it, now that I am 44 I think I should get

some kind of hubris loyalty award or something.)

powerful primitives

So, there are expand-time primitives and run-time primitives. The

expander knows about expand-time primitives and the compiler knows about

run-time primitives. One particularly powerful primitive is

%inline-wasm, which takes an inline snippet of WebAssembly as an

s-expression and applies it to a number of arguments passed at run-time.

Consider

make-bytevector:

(define* (make-bytevector len #:optional (init 0))

(%inline-wasm

'(func (param $len i32) (param $init i32)

(result (ref eq))

(struct.new

$mutable-bytevector

(i32.const 0)

(array.new $raw-bytevector

(local.get $init)

(local.get $len))))

len init))

We have an inline snippet of wasm that makes a $mutable-bytevector.

It passes 0 as the hash field, meaning that the hashq of this value

will be lazily initialized, and the contents are a new array of a given

size and initial value. Inputs will be unboxed to the appropriate type

(two i32s in this case), and likewise with outputs; here we produce

the universal (ref eq) representation.

The nice thing about %inline-wasm is that the compiler didn’t have to

be taught about make-bytevector: this definition suffices, because

%inline-wasm can access a number of lower-level capabilities.

dual denotations

But as we learned in my notes on whole-program

compilation,

any run-time definition is available at compile-time, if it is reachable

from a syntax transformer. So this definition above isn’t quite

sufficient; we can’t call make-bytevector as part of a procedural

macro, which we might want to do. What we need instead is to provide

one definition when residualizing wasm at run-time, and another when

loading a module at expand-time.

In Hoot we do this with cond-expand, where we expand to %inline-wasm

when targetting Hoot, and... what, precisely, at expand-time? Really we

need to make a Guile bytevector, so in this sort of case, we end up

having to include a run-time make-bytevector definition in the (hoot primitives) module. This happens whereever we end up using

%inline-wasm.

building to guile

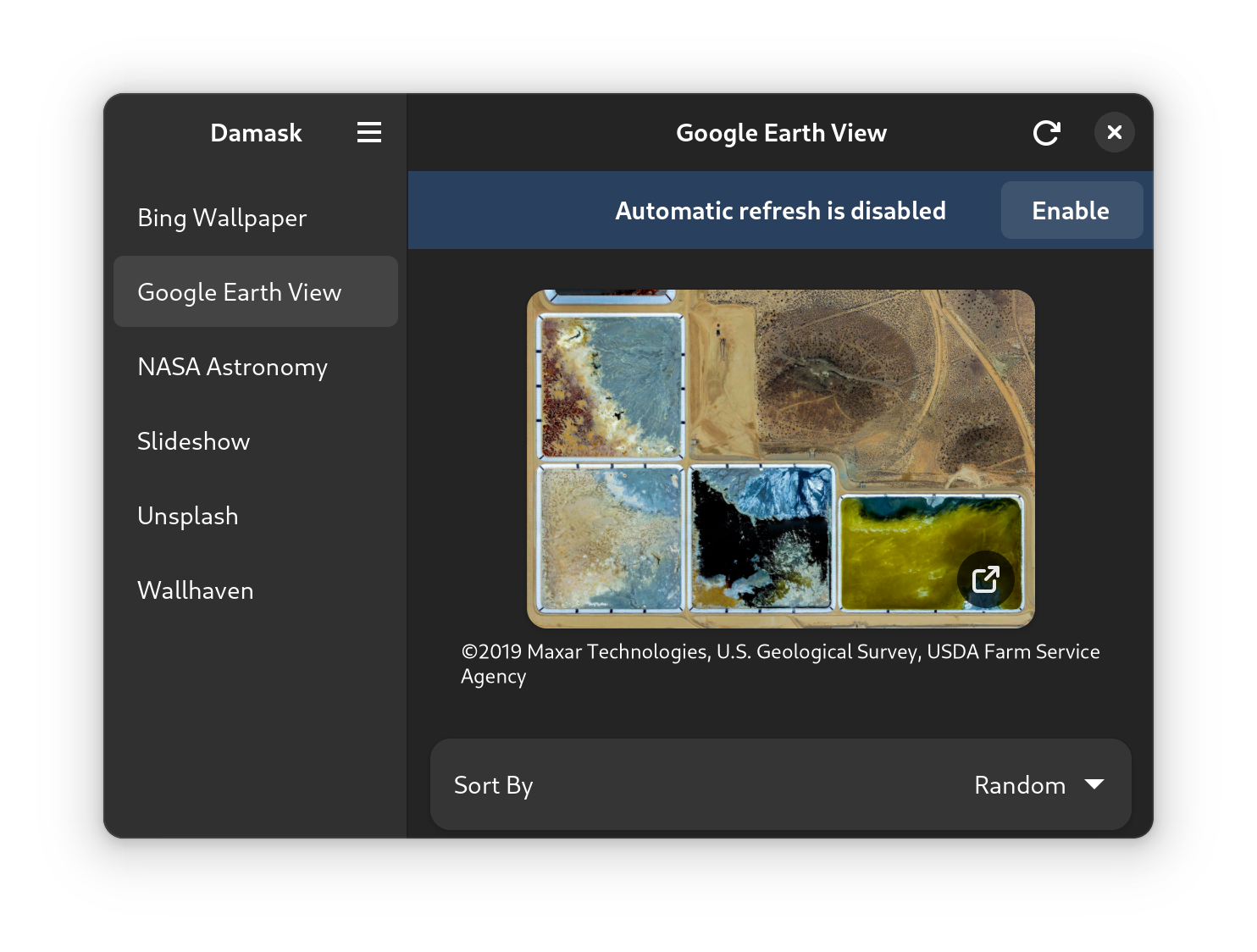

Returning to our graph, we see that there is a red-colored block for

Hoot modules, a teal-colored layer on top for those modules that are

defined by R7RS, a few oddballs, and then (guile) and Fibers built on

top. The

(guile) module provides a shim that implements Guile’s own default set

of bindings, allowing Guile modules to be loaded on a Hoot system.

(guile) is layered on top of the low-level Hoot libraries, and out of

convenience, on top of the various R7RS libraries as well, because it

was easiest to remember what was where in R7RS than our ad-hoc nest of

Hoot internal libraries.

Having (guile) lets Guile hackers build on Hoot. It’s still

incomplete but I think eventually it will be capital-G Good. Even for a

library that needed more porting like Fibers (Hoot has no threads so

much of the parallel concurrent ML implementation can be simplified, and

we use an event loop from the Wasm run-time instead of an epoll-based

scheduler), it was still pleasant to be able to use define-module and

keyword arguments and all of that.

next layers

I mentioned that this tower of terms is incomplete, and so that is one

of the next work items for Hoot: complete support for Guile’s run-time

library. At that point we’d probably want to merge it into Guile, but

that is another topic.

But let’s leave that for another day; until then, happy hacking!

Many years ago, when working at Xamarin, where we were building cross-platform

libraries for mobile developers, we wanted to offer both 2D and 3D gaming

capabilities for our users in the form of adding 2D or 3D content to their

mobile applications.

Many years ago, when working at Xamarin, where we were building cross-platform

libraries for mobile developers, we wanted to offer both 2D and 3D gaming

capabilities for our users in the form of adding 2D or 3D content to their

mobile applications.