I use Time Machine because it’s an awesome backup program. However, I don’t really trust hard drives that much, and I happen to be a bit of a file system geek, so I back up my laptop and iMac to another machine that stores the data on ZFS.

I first did this using Netatalk on OpenSolaris, then OpenIndiana, and now on SmartOS. Netatalk is an open source project for running AFP (Apple Filing Protocol) services on Unix operating systems. It has great support for the newer protocol features that Time Machine requires. As far as I’m aware, all embedded NAS devices use this software.

Sometimes, Time Machine “eats itself”. A backup will fail with a message like “Verification failed”, and you’ll need to make a new one. I’ve never managed to recover the disk from this point using Disk Utility.

My setup is a RAID-Z of 3 x 2TB drives, giving a total of 4TB of storage space (and 2TB of redundancy). In the four years I’ve been running this, I have had 3 drives go bad and replaced them. They’re cheap drives, but I’ve never lost data due to a bad disk or having to replace one. I’ve also seen silent data corruption, and know that ZFS has corrected it for me.

Starting a new backup is a pain, so what do I do?

ZFS Snapshots

I have a script, which looks like this:

ZFS=zones/MacBackup/MattBookPro

SERVER=vault.local

if [ -n "$1" ]; then

SUFFIX=_"$1"

fi

SNAPSHOT=$(date "+%Y%m%d_%H%M")$SUFFIX

echo "Creating zfs snapshot: $SNAPSHOT"

ssh -x "$SERVER" zfs snapshot "$ZFS@$SNAPSHOT"

This uses the zfs snapshot command to create a snapshot of the backup. There’s another script like it for my iMac backup. I run each script manually against the ZFS file system (directory) that holds that backup. I’m working on an automatic solution that listens to system logs to know when the backup has completed and the volume is unmounted, but it’s not finished yet (like many things). Running the script takes about a second.
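
Since the per-machine scripts differ only in the dataset name, they could be folded into one. What follows is a minimal sketch of that idea, not the script shown above: the iMac dataset name `zones/MacBackup/iMac` and the `DRY_RUN` switch are made up for illustration.

```shell
#!/bin/sh
# Sketch: snapshot several Time Machine datasets in one go.
# ASSUMPTIONS: the iMac dataset name and the DRY_RUN switch are hypothetical.

SERVER=vault.local
DATASETS="zones/MacBackup/MattBookPro zones/MacBackup/iMac"

# Build a snapshot name such as 20131212_0643 or 20131212_0643_pre-upgrade.
snapshot_name() {
    name=$(date "+%Y%m%d_%H%M")
    if [ -n "$1" ]; then
        name="${name}_$1"
    fi
    printf '%s\n' "$name"
}

# Snapshot every dataset under the same name, so the pair is easy to match up.
# With DRY_RUN=1 the ssh commands are printed instead of executed.
snapshot_all() {
    snap=$(snapshot_name "$1")
    for ds in $DATASETS; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ssh -x $SERVER zfs snapshot $ds@$snap"
        else
            echo "Creating zfs snapshot: $ds@$snap"
            ssh -x "$SERVER" zfs snapshot "$ds@$snap"
        fi
    done
}
```

Setting `DRY_RUN=1` and calling `snapshot_all pre-upgrade` prints the two ssh commands without touching the server, which is a cheap way to check the names first.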

Purging snapshots

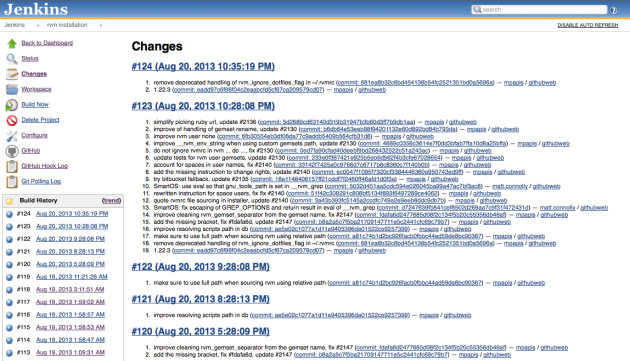

My current list of snapshots looks like this:

matt@vault:~$ zfs list -r -t all zones/MacBackup/MattBookPro

NAME USED AVAIL REFER MOUNTPOINT

zones/MacBackup/MattBookPro 574G 435G 349G /MacBackup/MattBookPro

...snip...

zones/MacBackup/MattBookPro@20131124_1344 627M - 351G -

zones/MacBackup/MattBookPro@20131205_0813 251M - 349G -

zones/MacBackup/MattBookPro@20131212_0643 0 - 349G -

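The USED value on the first row shows the space used by this file system and all of its snapshots; with 349G currently referenced, roughly 225G of the 574G is held only by snapshots. The USED column for each snapshot shows how much space is unique to that snapshot, that is, what destroying it alone would free.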

Purging old snapshots is a manual process for now. One day I’ll get around to keeping snapshots according to a rule like Time Machine’s hourly, daily, weekly scheme.
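
A much simpler rule than Time Machine’s is "keep the newest N snapshots". Here is a hedged sketch of that rule only, assuming the snapshot list is fed in oldest first (which `zfs list -s creation` provides). The function names are invented for this example, and nothing is destroyed automatically.

```shell
#!/bin/sh
# Sketch: keep the newest KEEP snapshots of a dataset and list the rest.
# ASSUMPTION: input arrives oldest first, as "zfs list -s creation" prints it.

# Read snapshot names (oldest first) on stdin and print all but the
# newest $1 of them, i.e. the candidates for "zfs destroy".
prune_candidates() {
    keep=$1
    awk -v keep="$keep" '
        { names[NR] = $0 }
        END { for (i = 1; i <= NR - keep; i++) print names[i] }
    '
}

# List the snapshots of one dataset that fall outside the newest $2.
# Check the output by eye before passing any of it to "zfs destroy".
list_prunable() {
    dataset=$1
    keep=$2
    zfs list -H -t snapshot -o name -s creation -d 1 "$dataset" |
        prune_candidates "$keep"
}
```

For example, `list_prunable zones/MacBackup/MattBookPro 10` would print every snapshot except the ten most recent ones.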

Rolling back

So when Time Machine goes bad, it’s as simple as rolling back to the latest snapshot, which was a known good state.

My steps are:

- shut down netatalk service

- zfs rollback

- delete netatalk inode database files

- restart netatalk service

- rescan directory to recreate inode numbers (using Netatalk’s “dbd -r” command)

This process is a little more involved, but still much faster than making a whole new backup.

The main reason for this is that HFS uses an “inode” number to uniquely identify each file on a volume. This is one trick that Mac Aliases use to track a file even if it changes name and moves to another directory. This concept doesn’t exist in other file systems, and so Netatalk has to maintain a database (its CNID database) of which numbers to use for which files. There are some rules, like inode numbers can’t be reused and they must not change for a given file.

Unfortunately, ZFS rollback, like any other operation on the server that changes files without netatalk knowing, ends up with files that have no inode number. The bigger problem seems to be deleting files and leaving their inodes in that database. This tends to make Time Machine quite unhappy about using that network share. So after a rollback, I have a rule that I nuke netatalk’s database and recreate it.

This violates the rule that inode numbers shouldn’t change (unless they magically come out the same, which I highly doubt), but this hasn’t seemed to cause a problem for me. Imagine plugging a new computer into a Time Machine volume: it has no knowledge of what the inode numbers were, so it just starts using them as is. It’s more likely to be an issue for Netatalk scanning a directory and seeing inodes for files that are no longer there.

Recreating the Netatalk inode database can take an hour or two, but it’s local to the server and much faster than a complete network backup, which also loses your history.
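
The five rollback steps listed earlier can be sketched as a script. This is a rough outline under stated assumptions, not a tested procedure: the SMF service name `netatalk` and the database living in `.AppleDB` at the volume root (the Netatalk 2.x default) are guesses to check against your own install. By default it only prints the commands.

```shell
#!/bin/sh
# Sketch of the rollback steps; with DRY_RUN=1 (the default) it only
# prints the commands instead of running them.
# ASSUMPTIONS: the SMF service name "netatalk" and the ".AppleDB" database
# directory at the volume root are guesses; verify both before a real run.

ZFS=zones/MacBackup/MattBookPro
VOLUME=/MacBackup/MattBookPro
SERVICE=netatalk            # hypothetical SMF service name

# Run a command, or just print it when DRY_RUN is 1.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Roll the backup dataset back to the named snapshot and rebuild the database.
rollback_backup() {
    snapshot=$1
    run svcadm disable -s "$SERVICE"     # 1. stop Netatalk and wait for it
    run zfs rollback "$ZFS@$snapshot"    # 2. plain rollback: latest snapshot only
    run rm -rf "$VOLUME/.AppleDB"        # 3. drop the stale inode database
    run svcadm enable "$SERVICE"         # 4. start Netatalk again
    run dbd -r "$VOLUME"                 # 5. rescan and rebuild the database
}
```

Calling `rollback_backup 20131212_0643` prints the five commands in order. A plain `zfs rollback` refuses anything but the most recent snapshot, which matches the "roll back to the latest snapshot" approach described here.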

Conclusion

Time Machine used to eat itself a lot: say, once every 3-4 months when I first started doing this. This may have been due to bugs in Time Machine, bugs in Netatalk, or incompatibilities between them. It certainly wasn’t due to data corruption.

Pros:

- Time Machine, yay!

- ZFS durability and integrity.

- ZFS snapshots allow point in time recovery of my backup volume.

- ZFS on disk compression to save backup space!

- Netatalk uses the standard AFP protocol, so the Time Machine volume can be accessed from your restore partition or a new Mac – no extra software required on the Mac!

Cons:

- Effort – complexity to manage, install & configure netatalk, etc.

- Rollback time.

- Network backups are slow.

As time has gone on, both Time Machine and Netatalk have improved substantially. I’ve also added an SSD cache to the server, and it is swimmingly fast and reliable. And thanks to ZFS, it is durable and free of corruption. I think I’ve had a failed backup only twice in the last year, and both times were on Mountain Lion. I haven’t had to do a single rollback since starting to use the Mavericks beta back around June.

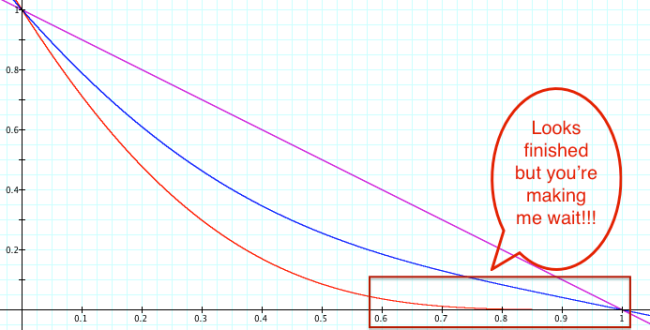

I’d still like to see a faster solution, and I have a plan: a network block device.

This would, however, require some software to be installed on the Mac, so it may not be as easy to use in a disaster recovery scenario.

ZFS has a feature called a “volume”. When you create one, it appears to the system (the one running ZFS) as another block device, just like a physical hard disk, or a file. A file system can be created on this volume, which can then be mounted locally. I use this for the disks in virtual machines, and can snapshot them and roll back just as if they were a file system tree of files.
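
To make the idea concrete, here is a small sketch that prints the commands for the life cycle of such a volume. The volume name, size and snapshot name are hypothetical, and the function only echoes the commands rather than running them.

```shell
#!/bin/sh
# Sketch: the life cycle of a ZFS volume, printed as commands.
# ASSUMPTIONS: the volume name, size and snapshot name are hypothetical.

# Print the commands to create a volume, snapshot it and roll it back.
zvol_commands() {
    vol=$1      # e.g. zones/MacBackup/tm-volume (made-up name)
    size=$2     # e.g. 500G
    echo "zfs create -V $size $vol"        # create a block device of that size
    echo "zfs snapshot $vol@known-good"    # snapshot it like any file system
    echo "zfs rollback $vol@known-good"    # and roll it back the same way
}
```

On illumos-based systems such as SmartOS, a volume created this way shows up as a device node under /dev/zvol/dsk/, named after the pool and volume.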

There’s an existing server module that’s been around for a while: http://nbd.sourceforge.net

If this volume could be mounted across the network on a Mac, the volume could be formatted as HFS+ and Time Machine could back up to it using local disk mode, skipping all the slow sparse image file system work. And there’s a lot of work. My Time Machine backup of a Mac with a 256GB disk creates a whopping 57206 files in the bands directory of the sparseimage. It’s a lot of work to traverse these files, even locally on the server.

This is my next best option short of actually using ZFS on the Mac. Whatever “reasons” Apple has for ditching it are not good enough simply because we don’t know what they are. ZFS is a complex beast. Apple is good at simplifying things. It could be the perfect solution.